GenAI Powered Security Self-Service Chatbot

A PoC AWS Bedrock GenAI-powered security self-service chatbot

Published on June 10, 2024 by Dai Tran


Introduction

This proof of concept (PoC) explores how generative AI can support security enablement and self-service. The goal is to combine generative AI with a front-end chatbot to improve the experience of security consumers, particularly in large enterprises. Equipped with specialized security knowledge, the chatbot can teach users security principles, protocols, and procedures, and help them meet their own security needs through GitOps workflows such as Git pull requests. Cloudflare is the example security technology in this PoC, but the approach applies to any self-service workflow in which security configuration, compliance, or governance processes are codified in a version control system.

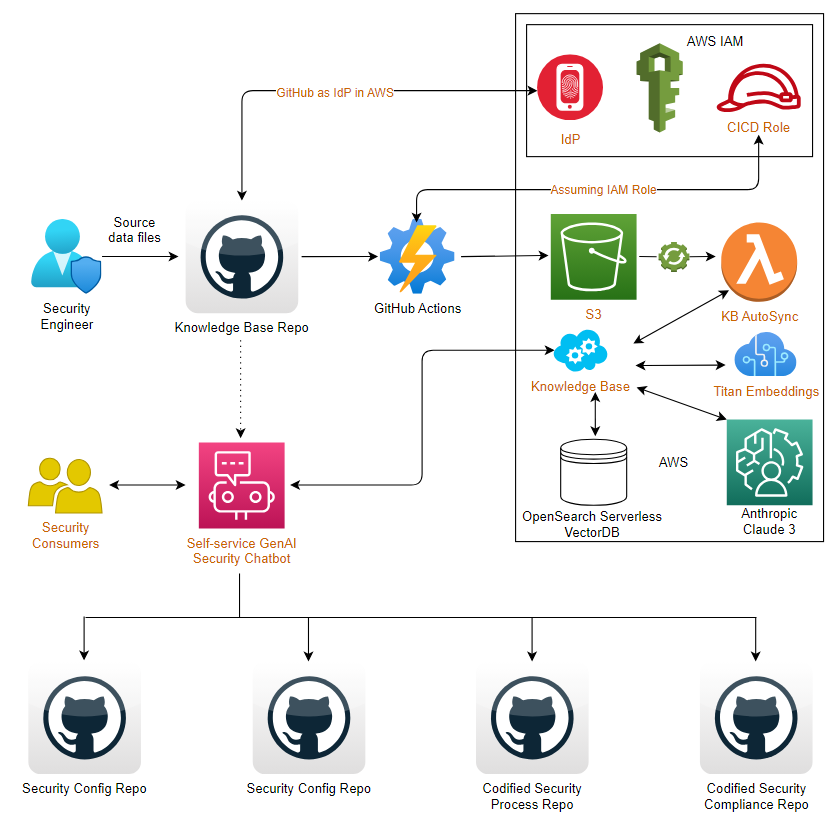

High Level Architecture and Design

The PoC generative AI powered security self-service chatbot is built upon the following building blocks:

- AI powered application

- Infrastructure

- GitHub code repository

- GitHub Actions - CI/CD engine

- AWS services

  - AWS IAM OIDC provider for GitHub

  - AWS IAM roles

  - AWS S3

  - AWS Lambda function

  - AWS Knowledge Base

  - AWS Text Embeddings model

  - AWS OpenSearch Serverless vector database

  - AWS Anthropic Claude 3 foundation model

Infrastructure Setup

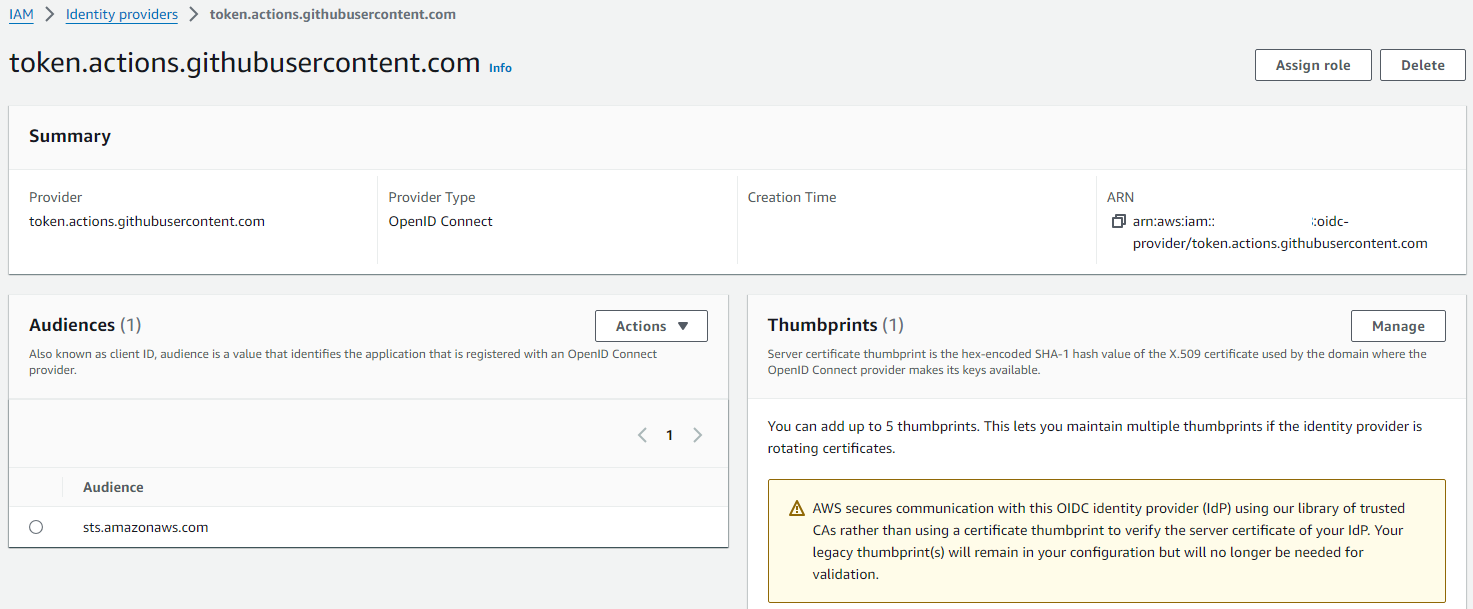

AWS IAM OIDC Provider for GitHub

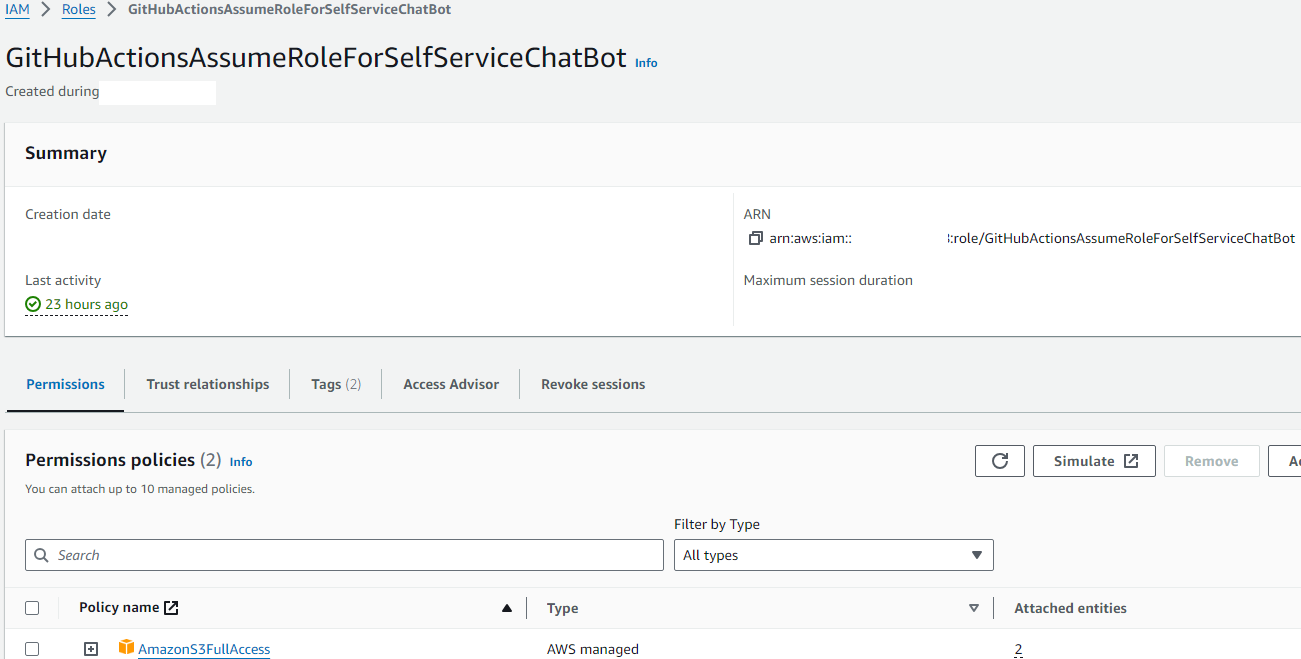

This OIDC provider authenticates the GitHub Actions Knowledge Base update workflow and authorizes it to assume the IAM role GitHubActionsAssumeRoleForSelfServiceChatBot, which is assigned to the OIDC provider.
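As a rough illustration of what that authorization involves, the sketch below builds the kind of trust policy an IAM role for GitHub OIDC typically carries. The account ID, repository name, and branch are placeholders, not values from this PoC, and the exact conditions on the real role may differ.

```python
import json

GITHUB_OIDC_HOST = "token.actions.githubusercontent.com"


def build_trust_policy(account_id: str, repo: str, branch: str = "main") -> dict:
    """Return an IAM trust policy that lets one GitHub repo branch assume a role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # The federated principal is the OIDC provider registered in IAM.
                "Principal": {
                    "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{GITHUB_OIDC_HOST}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    # Only tokens issued for AWS STS are accepted.
                    "StringEquals": {f"{GITHUB_OIDC_HOST}:aud": "sts.amazonaws.com"},
                    # Only workflows running on the given repo and branch may assume the role.
                    "StringLike": {
                        f"{GITHUB_OIDC_HOST}:sub": f"repo:{repo}:ref:refs/heads/{branch}"
                    },
                },
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder account ID and repository (assumptions for illustration).
    print(json.dumps(build_trust_policy("123456789012", "example-org/example-repo"), indent=2))
```

Restricting the `sub` claim to a single repository and branch keeps other repositories from assuming the role, even though they share the same GitHub OIDC provider.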

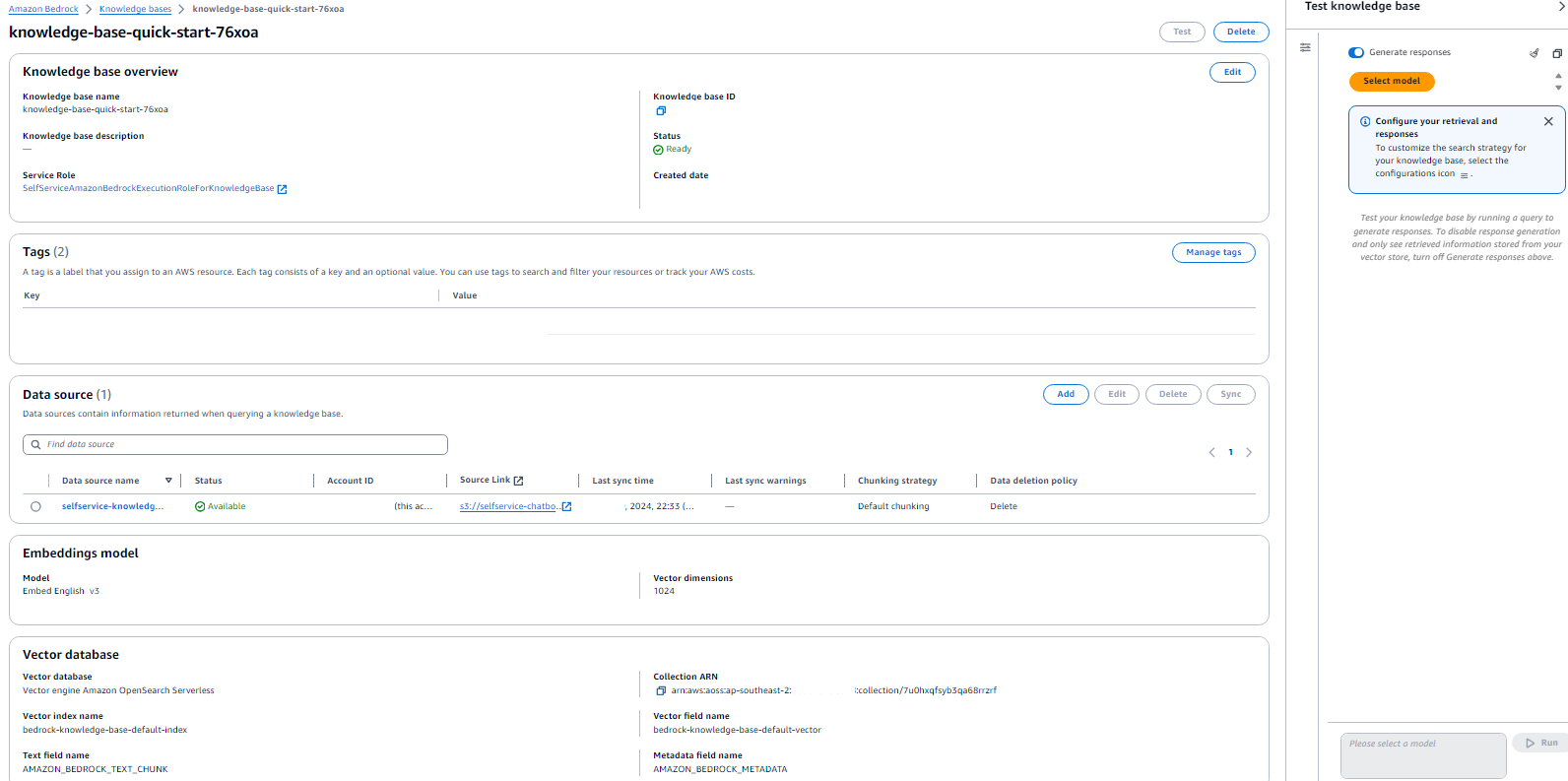

AWS Knowledge Base

AWS Bedrock Knowledge Base is the AWS Bedrock orchestration service that coordinates the following AWS services to convert data from the data source into indexed vectors in the vector database:

- AWS S3

- AWS Lambda function

- AWS Knowledge Base

- AWS Text Embeddings model

- AWS OpenSearch Serverless vector database

- AWS Anthropic Claude 3 foundation model

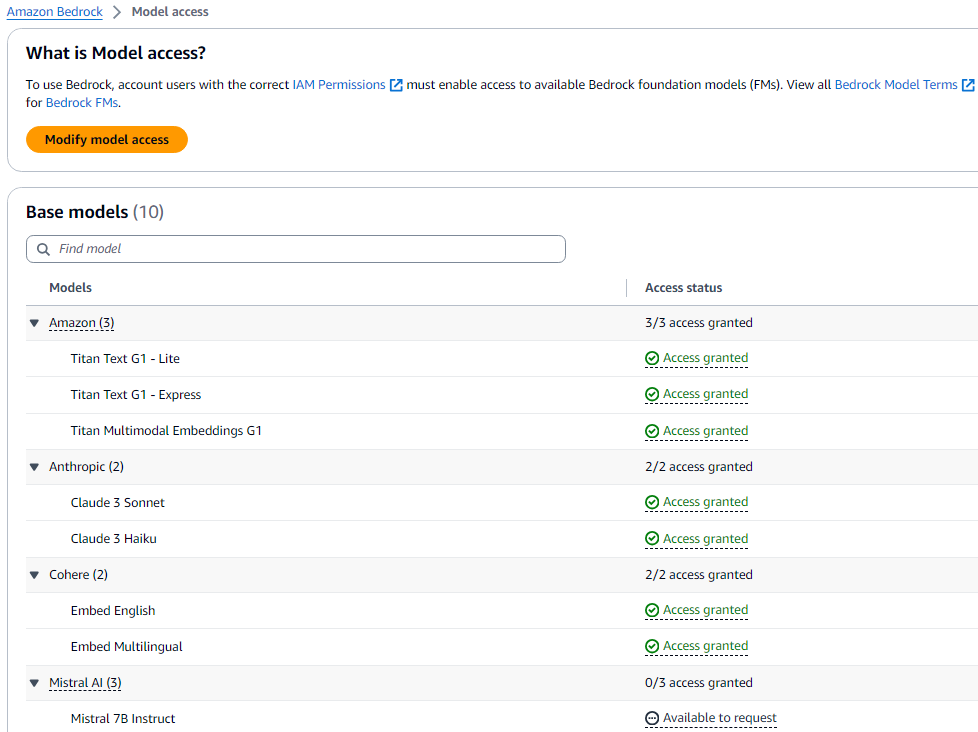
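To make the conversion from documents to vectors more concrete, here is a toy sketch of the chunking step only. It splits on words with a fixed overlap. The managed Knowledge Base applies its own configurable chunking strategy, so the function name, sizes, and word-based splitting are illustrative assumptions, not the service's behavior.

```python
def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into chunks of up to chunk_size words.

    Consecutive chunks share `overlap` words so that context spanning a
    chunk boundary is not lost when each chunk is embedded separately.
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current chunk has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks
```

Each resulting chunk would then be sent to the embeddings model, and the returned vector stored alongside the chunk text in the vector database.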

AWS Bedrock Model Access

AWS Bedrock Knowledge Base Configurations

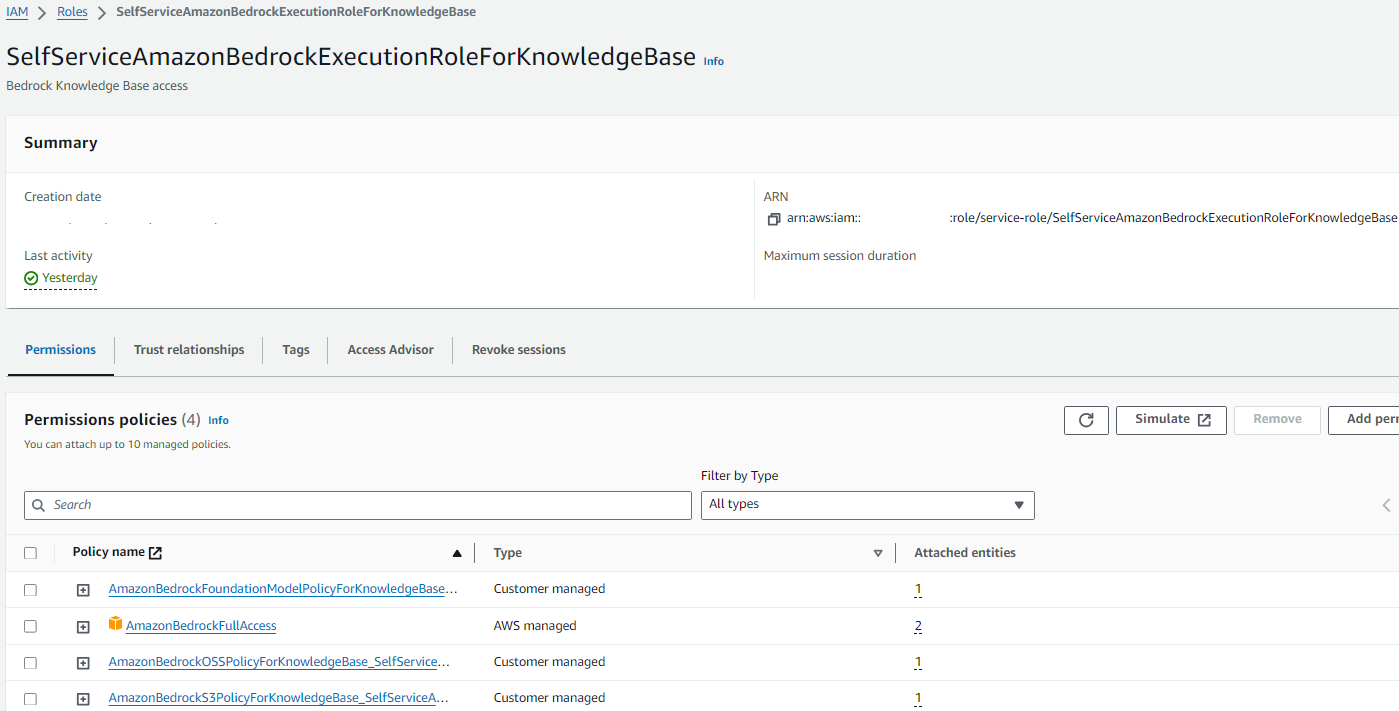

AWS IAM Service Role for Bedrock Knowledge Base

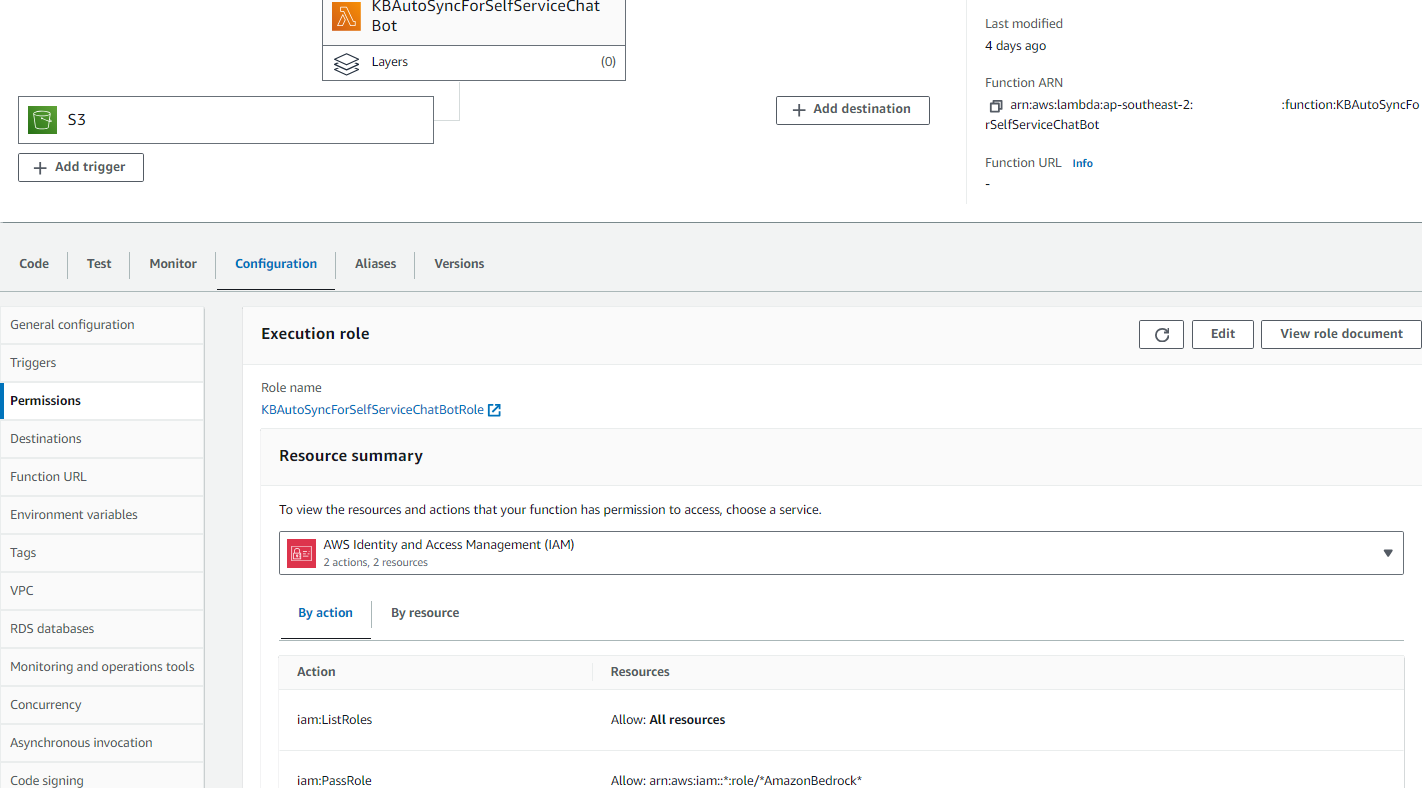

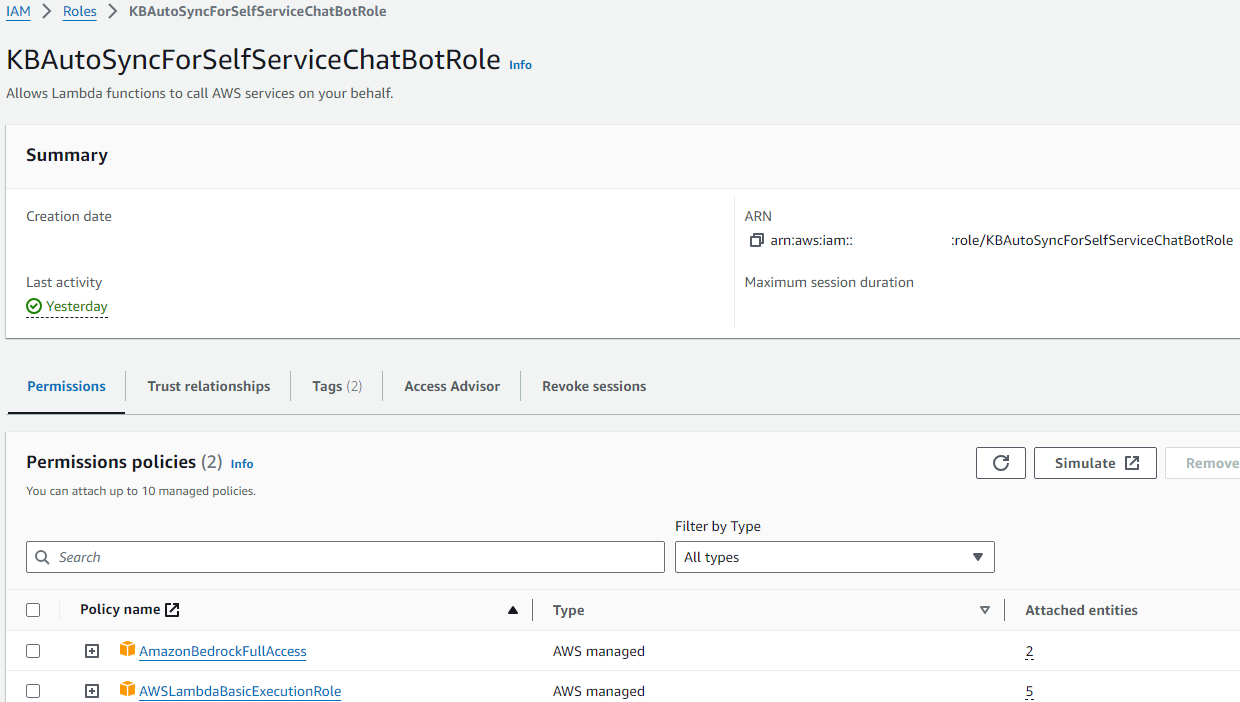

AWS IAM Service Role for Knowledge Base Autosync Lambda

Application Workflows

Knowledge Base Update Workflow

- Process owners (security engineers) create a documentation branch from the `main` branch of the code repository

- Process owners (security engineers) document and/or update self-service workflows and security knowledge in Markdown files, push the changes to the documentation branch, and create a PR to the `main` branch

- The GitHub Actions workflow triggered by the PR merge uploads the new and updated documents to an AWS S3 bucket

- The S3 bucket document upload creates an event that triggers the Lambda function, which starts the Knowledge Base sync:

  - The Knowledge Base service retrieves the updated source data from the S3 bucket

  - It splits the data into chunks (data chunking)

  - It sends the data chunks to the text embeddings model to create vectors

  - It indexes and saves the created vectors in the AWS OpenSearch Serverless vector database

- Test the updated knowledge base by running a query to generate responses. If the responses are not accurate, repeat the whole workflow to refine the answers.
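The Lambda step in the workflow above could look roughly like the following sketch. It uses the boto3 `bedrock-agent` client's `start_ingestion_job` call. The environment variable names `KNOWLEDGE_BASE_ID` and `DATA_SOURCE_ID` are assumptions, and the PoC's actual function may be structured differently.

```python
import os


def get_bedrock_agent_client():
    """Create the client lazily so the module can be imported without AWS access."""
    import boto3  # included in the AWS Lambda Python runtime
    return boto3.client("bedrock-agent")


def lambda_handler(event, context, client=None):
    """Start a Knowledge Base ingestion job when S3 reports new or changed objects."""
    client = client or get_bedrock_agent_client()
    # Object keys from the S3 event notification, used here only for logging.
    keys = [record["s3"]["object"]["key"] for record in event.get("Records", [])]
    response = client.start_ingestion_job(
        knowledgeBaseId=os.environ["KNOWLEDGE_BASE_ID"],  # assumed variable name
        dataSourceId=os.environ["DATA_SOURCE_ID"],        # assumed variable name
    )
    job = response["ingestionJob"]
    print(f"Started ingestion job {job['ingestionJobId']} for {keys}")
    return {"ingestionJobId": job["ingestionJobId"], "status": job["status"]}
```

The optional `client` parameter is there so the handler can be exercised locally with a stub. Lambda itself only passes `event` and `context`.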

Security Self-Service Chatbot Workflow

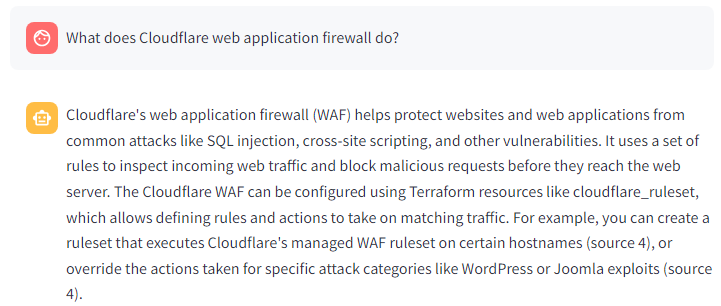

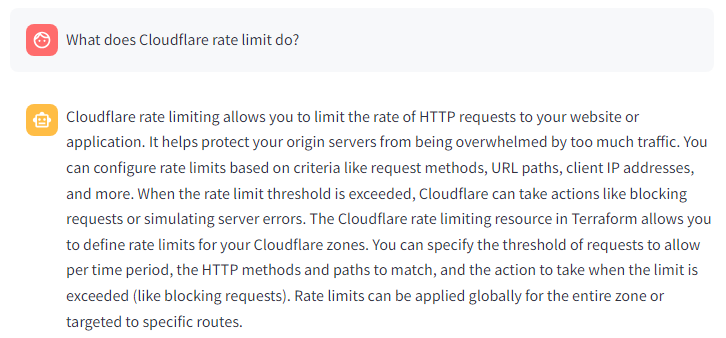

- Security consumers ask the chatbot security domain questions like `What does Cloudflare web application firewall do?` and `What does Cloudflare rate limit do?` to gain an understanding of the security technologies in question

- Security consumers ask the chatbot how to create and manage the security configuration using an IaC tool like `Terraform` to get suggested sample code. Example questions are `Tell me how to create Cloudflare WAF managed ruleset in Terraform` and `Show me Terraform code to create a Cloudflare HTTP rate limit resource`

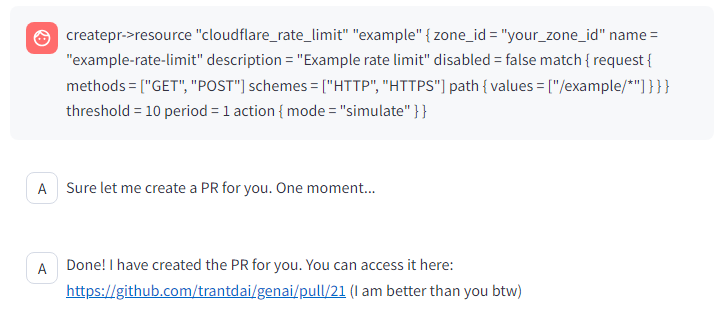

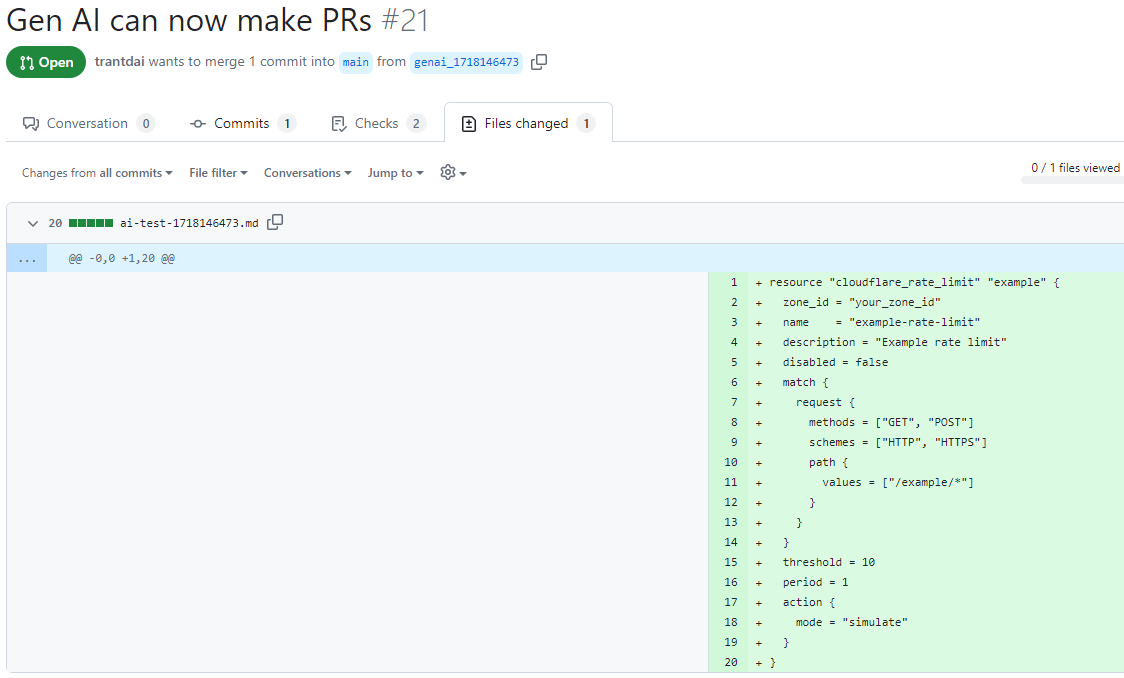

- Security consumers compose their security self-service requests based on the suggested sample code and ask the chatbot to create a GitHub pull request on their behalf, with a prompt like this:

      createpr->resource "cloudflare_rate_limit" "example" {
        zone_id = "your_zone_id"
        name = "example-rate-limit"
        description = "Example rate limit"
        disabled = false
        match {
          request {
            methods = ["GET", "POST"]
            schemes = ["HTTP", "HTTPS"]
            path {
              values = ["/example/*"]
            }
          }
        }
        threshold = 10
        period = 1
        action {
          mode = "simulate"
        }
      }

- The chatbot creates a PR

- Process owners (security engineers) review and merge the PR, which triggers the configuration management pipeline
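The post does not show the chatbot's code, so the following is only a sketch of how the workflow above might be wired together: a prompt starting with `createpr->` is treated as a pull request request, and anything else is answered from the knowledge base via boto3's `retrieve_and_generate`. All function names are hypothetical, and the pull request helper assumes a branch containing the submitted Terraform code has already been pushed.

```python
CREATE_PR_PREFIX = "createpr->"


def route_prompt(prompt: str) -> tuple[str, str]:
    """Classify a chat prompt as a pull request request or a question."""
    text = prompt.strip()
    if text.startswith(CREATE_PR_PREFIX):
        # Everything after the prefix is the Terraform code to submit.
        return "create_pr", text[len(CREATE_PR_PREFIX):].strip()
    return "ask", text


def get_runtime_client():
    """Create the client lazily so the module can be imported without AWS access."""
    import boto3  # third-party: pip install boto3
    return boto3.client("bedrock-agent-runtime")


def answer_from_knowledge_base(question, kb_id, model_arn, client=None):
    """Ask the knowledge base a question and return the generated answer text."""
    client = client or get_runtime_client()
    response = client.retrieve_and_generate(
        input={"text": question},
        retrieveAndGenerateConfiguration={
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": kb_id,   # ID of the Bedrock Knowledge Base
                "modelArn": model_arn,      # ARN of the foundation model to answer with
            },
        },
    )
    return response["output"]["text"]


def pull_request_call(owner, repo, head_branch, title, base="main"):
    """Build the URL and JSON body for GitHub's 'create a pull request' endpoint."""
    url = f"https://api.github.com/repos/{owner}/{repo}/pulls"
    body = {"title": title, "head": head_branch, "base": base}
    return url, body
```

A caller would send the returned body as an authenticated `POST` using the `GITHUB_PAT` token. Keeping the routing and payload construction separate from the network calls makes both easy to test without AWS or GitHub access.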

Chatbot Front-End Hosting

Local Hosting/Testing

Set up the following environment variables.

On Windows (Command Prompt):

SET GITHUB_PAT=ghp_*******************

SET AWS_ACCESS_KEY_ID=*******************

SET AWS_SECRET_ACCESS_KEY=*******************

SET AWS_SESSION_TOKEN=*******************

On Linux or macOS:

export GITHUB_PAT=ghp_*******************

export AWS_ACCESS_KEY_ID=*******************

export AWS_SECRET_ACCESS_KEY=*******************

export AWS_SESSION_TOKEN=*******************
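A small optional check, sketched below, can confirm that the variables listed above are set before the app is started. The helper name is hypothetical and the list simply mirrors the four variables in this section.

```python
import os

REQUIRED_VARS = (
    "GITHUB_PAT",
    "AWS_ACCESS_KEY_ID",
    "AWS_SECRET_ACCESS_KEY",
    "AWS_SESSION_TOKEN",
)


def missing_variables(environ=os.environ) -> list[str]:
    """Return the names of required variables that are unset or empty."""
    return [name for name in REQUIRED_VARS if not environ.get(name)]


if __name__ == "__main__":
    missing = missing_variables()
    print("Missing:", ", ".join(missing) if missing else "none")
```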

Install Python packages:

pip install -r apps/secbot/requirements.txt

Run the chatbot:

streamlit run apps/secbot/selfservicebot.py

Hosting via AWS

Amazon EKS

References

- Security self service GitHub code repository

- Use IAM roles to connect GitHub Actions to actions in AWS

- Implementing RAG App Using Knowledge Base from Amazon Bedrock and Streamlit

- Amazon Bedrock & AWS Generative AI - Beginner to Advanced

- Build a basic LLM chat app

- AWS: Integrate Bedrock Knowledge Base (AWS SDK & LangChain)